Authored by Matthew J Lennon MD, Grant Rigney MSc, Zoltán Molnár MD, DPhil

Self-experimentation has shaped the history of neurological research1, from Isaac Newton mapping out the visual distribution of the retina by inserting a needle into his eye socket, to Henry Head distinguishing between types of somatic sensation by having branches of his own radial nerve transected.


As the pace of medical discovery increases, the gap between what is possible and what is regulated is expanding.

Self-experimentation is on the rise, and a community of “neuro-hackers” is attempting to augment neurological function and treat disease through unproven methods ranging from biochemical supplementation to transcranial direct current stimulation to genetic alteration2.

Individual self-experimenters and large research institutions have natural incentives to eschew collaboration.

Self-experimenters are drawn to their approach because they believe regulation impedes potentially paradigm-shifting experimentation. Conversely, institutions fear the liability they may incur by supporting these unwonted procedures. This mutual avoidance has created a legal and regulatory vacuum in the field of self-experimentation.

In this viewpoint, we explore the legal and regulatory frameworks of self-experimentation and, particularly in light of recent neurological experimentation, argue that a new compromise of individual deference to institutions and institutional liability for and support of individuals will allow self-experimentation to make progress on intractable problems.

Since the foundation of modern research ethics, self-experimentation has remained relatively unregulated. The Nuremberg Code is the bedrock of modern human experimentation law and a recognition that doctors and scientists committed atrocities during the 1930s and 1940s that should never be repeated. It makes an exception, however, for self-experimentation in Article 5:

“No experiment should be conducted where there is an a priori reason to believe that death or disabling injury will occur; except, perhaps, in those experiments where the experimental physicians also serve as subjects.”

The prosecution suggested this exemption, and the judges accepted it, because they saw it as necessary to stop the Nazi medical researchers on trial from pointing out that US military experiments, most famously the Walter Reed yellow fever experiments, had risked the lives of experimental subjects, including the researchers themselves3.

This regulatory ambiguity has persisted, as reflected in the dearth of laws and regulations on self-experimentation worldwide. The National Institutes of Health (NIH) (USA), the Institute of Medicine (IOM) (USA), the Medical Research Council (MRC) (UK) and the National Health and Medical Research Council (NHMRC) (AUS) have no specific policies or regulations on self-experimentation.

In a recent email survey of Institutional Review Boards4 (n = 37) in the Americas and Europe, two-thirds of boards had no specific guidelines on self-experimentation.

Interestingly, however, when asked whether they would require ethics review of self-experimentation protocols, two-thirds said they would, even though many had no guidelines covering such protocols. A number of ethics committees were concerned with preventing driven researchers from harming themselves excessively. One required a surrogate investigator to obtain informed consent if the research method was sufficiently risky. Another ethics office thought that n-of-1 experiments were not meaningful.

The boards that did not require an ethics application generally stated that self-experimentation procedures need no regulatory intervention because there is no corresponding legal guidance. In recent years, a number of countries, including the UK, Australia and Canada, have introduced corporate manslaughter laws that may hold a university or research institute liable if a self-experimenter is seriously harmed or dies. However, such laws have yet to be tested in court in this context.

Taken as a whole, this evidence shows that self-experimentation remains in a legal and regulatory limbo.

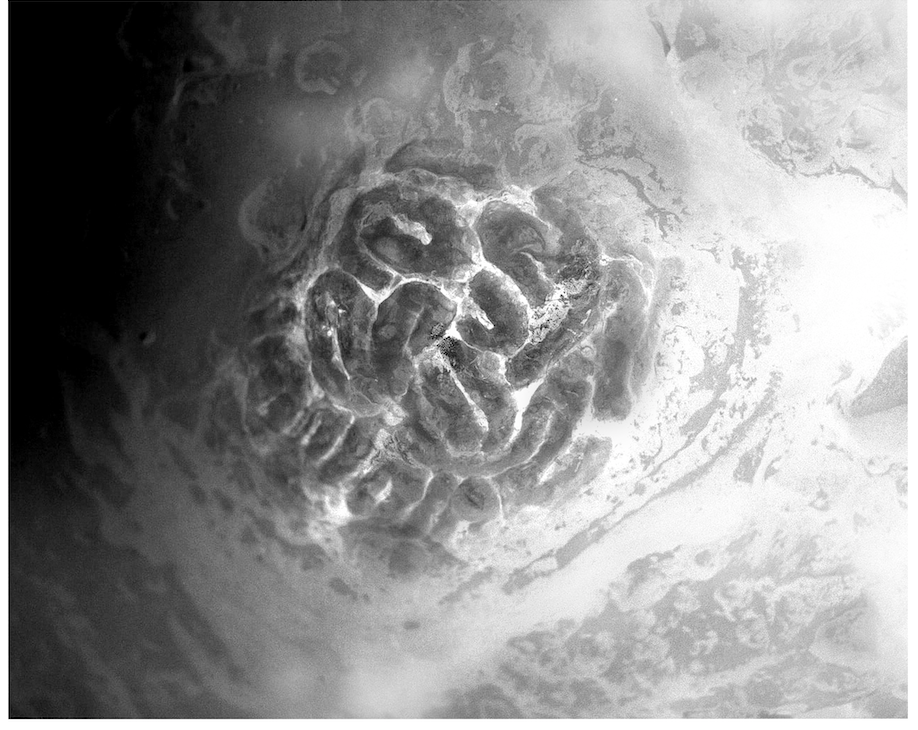

Philip Kennedy (1948 – present) is the neurologist who invented the neurotrophic electrode, an intracortical device consisting of a hollow glass cone containing nerve growth factor and several electrically conductive gold wires5.

In 1996, he and neurosurgeon Rod Bakay successfully implanted two of these electrodes into the hand motor area of a paralysed man suffering from “locked-in” syndrome. Kennedy taught the patient to direct a computer cursor along a horizontal line, allowing him to painstakingly tap out three characters per minute and thus communicate his thoughts6.

The work was hailed as a ground-breaking success: the world’s “first cyborg” and the first successful brain-computer interface.

The outlook appeared promising, but progress was not forthcoming. He attempted implants in three further paralysed patients, in 1999, 2002 and 2004, but each patient either failed to recover from surgery or died. Kennedy ran into a number of other roadblocks: his grants were not renewed, his neurosurgical partner, Bakay, died, and the FDA revoked his approval to insert the electrodes.

In 2014, after years of frustration, Kennedy, who runs his own private lab, Neural Signals, decided to have neurotrophic electrodes inserted into the speech centres of his own brain7. The surgery was performed in Belize, Central America, where he found willing surgeons and a less regulated medical system.

His recovery was complicated by serious cerebral oedema, expressive aphasia and a permanent facial droop. For around two months, he conducted experiments on himself, recording output from the speech-production areas of his cortex (the outer layer of the cerebrum) as he varied his speech, in an attempt to understand the neural code of speech.

Kennedy’s work typifies the motivations for self-experimentation, its potential for breakthroughs, and the profound dangers it poses to researcher-subjects. He has presented his findings at conferences but has yet to publish them formally or make them publicly accessible.

In 2015–16, Brian Hanley (1957 – present) had a plasmid encoding growth hormone-releasing hormone (GHRH), a neuroendocrine hormone, injected and introduced into his cells by electroporation. Without institutional support, he arranged for the plasmid to be developed independently. The first of two injections was given without anaesthesia and was excruciatingly painful, but otherwise uncomplicated. Like Kennedy, he has not formally published his work, but he has announced a number of results on Reddit: his testosterone increased by 20%, his leukocytes by 16% and his HDL by 20%, and his healing was reportedly faster. Without insight into the actual protocol, or a larger sample size, it is difficult to validate his results, but he claims, as many self-experimenters do, that they demonstrate an excellent proof of concept that may pave the way for GHRH gene therapy.

By contrast, Kevin Warwick (1954 – present) ran a successful, well-supported self-experimentation research program on human-machine interfaces at the University of Reading. In 1998, he became the first person worldwide to have a silicon microchip implanted under his skin. Much like a modern contactless credit card, the microchip allowed him to activate computer-controlled devices in his vicinity. Pursuing his cyborg ambitions, in 2002 he had a 100-electrode array implanted into the median nerve of his left arm. He used the neural signals read out from his median nerve to control a prosthetic arm in Reading, UK, while he was in New York, US8. After three months, the array had to be removed because fibrous tissue had grown around the electrodes, impairing electrical signal conduction. These experiments laid critical foundations for the prosthetic limb technology we see today.


These cases illustrate a number of problems with the current approach to self-experimentation:

- Academic journals have doubts about the ethical justification and methodological rigour of self-experimentation, as evinced by the feedback given directly to scientists who have submitted such papers9. For Kennedy and Hanley, the lack of publication will limit their future impact, weakening the justification for the risks and costs they shouldered. Institutional recognition and approval will ease these publishing difficulties and thus help contextualize the risks and rewards.

- Self-experimenters lack the framework needed to perform self-studies in safe, well-controlled environments. The methods of some past self-experimenters exposed them to greater harm than they might have faced had an appropriate framework been in place. Institutional support will allow these experiments to be safer, more valid and more reproducible.

- Self-experimenters lack objectivity in assessing whether the risks they take are justified. In Kennedy’s case in particular, the risks were very great and the findings have been of mixed significance. Institutional oversight will help mitigate the risks of self-experimentation while maximising its benefits.

Self-experimentation gives researchers the ability to break boundaries and perform “world firsts” as proof-of-concept studies. Before such study designs are incorporated into institutions and universities, it is critical that these institutions recognise that self-experimenters can consent to much greater risk than regular research participants, provided they are significantly better informed than a lay individual. A compromise between institutions and individual self-experimenters has the potential to open new frontiers in neurological research. From gene editing to novel optogenetic prostheses (which use light to trigger neuronal impulses) to single-cell electrophysiological recordings, there is a panorama of possibilities, limited only by human imagination and appetite for danger.

Image Credit: Anna du Toit (Creative Director)

References

1. Lenfest SM, Vaduva-Nemes A, Okun MS. Dr. Henry Head and lessons learned from his self-experiment on radial nerve transection: Historical vignette. J Neurosurg. 2011;114(2):529-533. doi:10.3171/2010.8.JNS10400

2. Zhang S. A Biohacker Regrets Publicly Injecting Himself With CRISPR. The Atlantic. https://www.theatlantic.com/science/archive/2018/02/biohacking-stunts-crispr/553511/. Published 2018. Accessed March 4, 2021.

3. Annas GJ. Self experimentation and the Nuremberg Code. BMJ. 2010;341:c7103. doi:10.1136/bmj.c7103

4. Hanley BP, Bains W, Church G. Review of Scientific Self-Experimentation: Ethics History, Regulation, Scenarios, and Views Among Ethics Committees and Prominent Scientists. Rejuvenation Res. 2018;22(1):31-42. doi:10.1089/rej.2018.2059

5. Kennedy PR, Bakay RAE. Activity of single action potentials in monkey motor cortex during long-term task learning. Brain Res. 1997;760(1):251-254. doi:10.1016/S0006-8993(97)00051-6

6. Kennedy PR, Bakay RAE, Moore MM, Adams K, Goldwaithe J. Direct control of a computer from the human central nervous system. IEEE Trans Rehabil Eng. 2000;8(2):198-202. doi:10.1109/86.847815

7. Engber D. The Neurologist Who Hacked His Brain—And Almost Lost His Mind. WIRED. https://www.wired.com/2016/01/phil-kennedy-mind-control-computer/. Published 2016. Accessed October 12, 2020.

8. Warwick K, Gasson M, Hutt B, et al. The Application of Implant Technology for Cybernetic Systems. Arch Neurol. 2003;60(10):1369-1373. doi:10.1001/archneur.60.10.1369

9. COPE. The ethics of self-experimentation. Cases. https://publicationethics.org/case/ethics-self-experimentation. Published 2015. Accessed November 2, 2020.